Ijraset Journal For Research in Applied Science and Engineering Technology

Elevating Cloud Migration: In-Depth Insights and Evaluations of the Traditional 7-Step Process for Optimal Performance and Strategic Transformation

Authors: Prof. Manisha Patil, Supriya Kamthekar, Shon Gaikwad, Yash Lonkar, Akshaya Survase

DOI Link: https://doi.org/10.22214/ijraset.2025.67035

Abstract

Recent advancements in cloud migration technology have introduced an AI-driven approach that simplifies and accelerates the process. This new method reportedly condenses the traditionally complex migration procedure into five main steps, leveraging artificial intelligence and machine learning to automate previously manual tasks. The streamlined process involves assessing migration needs, automating data preparation, optimizing systems for cloud environments, conducting AI-powered testing, and implementing continuous monitoring. Proponents of this approach claim it offers significant benefits, including reduced time and costs and a smoother transition from legacy systems to cloud infrastructure with minimal human intervention. This innovative strategy represents a potential shift in how businesses approach cloud migration, promising to make the process more efficient and accessible.

I. INTRODUCTION

Cloud computing has revolutionized the way organizations manage their IT infrastructure, offering unprecedented flexibility, scalability, and cost-efficiency. However, the process of migrating existing systems and applications to the cloud remains a complex and challenging endeavour for many businesses. This study aims to provide a comprehensive examination of cloud migration processes, focusing specifically on the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). By conducting an in-depth analysis of these models, we seek to identify and elucidate the myriad technical and business challenges that organizations face during migration. These challenges range from technical issues such as system compatibility, data security, and integration complexities, to organizational concerns including cost management, staff training, and change management strategies. Through a systematic investigation of these factors, our research aims to develop a robust and adaptable framework that can guide organizations of various sizes and industries through the intricacies of cloud migration. This framework will address not only the technical aspects of migration but also the organizational and strategic considerations necessary for a successful transition. By providing a holistic approach to cloud adoption, we intend to empower businesses with the knowledge and tools required to make informed decisions, mitigate risks, and optimize the benefits of cloud computing. Ultimately, this research seeks to contribute to the broader understanding of cloud migration strategies and to facilitate smoother, more efficient transitions to cloud-based infrastructures across diverse business environments.

A. Existing Methodology

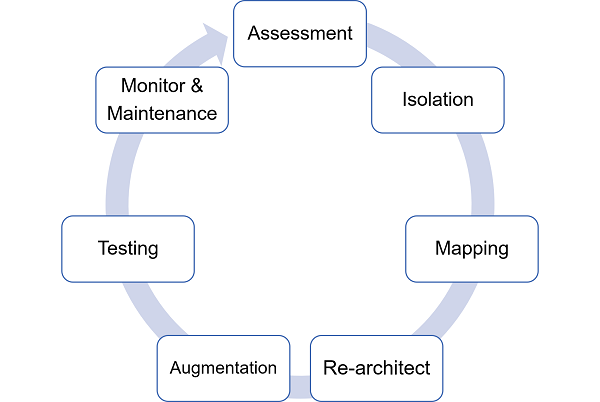

Figure 1: Existing 7-step CRM

The 7-step cloud migration model provides a structured framework to guide organizations through the complex process of transitioning their IT infrastructure and applications to cloud environments.

This comprehensive approach encompasses the following key phases:

- Assessment: This initial phase involves evaluating the current IT landscape, identifying migration goals, and determining which assets are suitable for cloud deployment. Organizations analyze their infrastructure, applications, and business requirements to develop a strategic migration plan.

- Isolation: During this step, components slated for migration are logically or physically separated from the existing IT environment. This segregation helps minimize disruption to ongoing operations and allows for focused migration efforts.

- Mapping: This stage involves creating a detailed blueprint of how the current IT architecture will translate to the cloud environment. It includes identifying application dependencies, data flows, and determining cloud counterparts for existing systems.

- Augmentation: In this phase, applications and systems are prepared for the cloud environment. This may involve modifying applications to ensure compatibility, optimizing performance, and incorporating cloud-specific features to enhance functionality.

- Re-architect: Some applications may require significant redesign to fully leverage cloud capabilities. This step involves restructuring applications to take advantage of cloud-native features, potentially including the adoption of microservices or serverless architectures.

- Testing: Before final deployment, comprehensive testing is conducted to ensure all migrated components function correctly in the cloud environment. This includes performance testing, security assessments, and user acceptance testing.

- Monitor & Maintenance: The final step is an ongoing process that begins post-migration. It involves continuous monitoring of the cloud environment to ensure optimal performance, security, and cost-efficiency, along with regular maintenance and optimizations.

B. Methodologies

This model emphasizes a methodical progression from initial analysis through execution and continuous improvement. By following these steps, organizations can mitigate risks, optimize resource allocation, and maximize the benefits of cloud adoption. The framework addresses common challenges such as compatibility issues, performance optimization, and organizational change management, providing a systematic approach to navigating cloud migration's intricacies. While this 7-step model serves as a valuable guideline, it's important to recognize that each organization's migration journey may require customization based on its unique requirements, existing infrastructure, and specific business goals. The model's structured approach aims to facilitate a smoother transition to cloud-based operations, enabling businesses to harness the full potential of cloud computing while minimizing disruptions to their operations.

1) Paper [1] : VM-based Adaptive Resource Management

The researchers developed an adaptive resource management system for cloud computing environments using virtualization technologies. They designed a resource controller called Adaptive Manager that dynamically adjusts virtualized resources (CPU, memory, I/O) to meet application service level objectives. The system uses feedback control theory, implementing a multi-input, multi-output controller. Each virtual machine contains a sensor module that periodically measures application performance and transmits this data to the Adaptive Manager. The researchers used the Kernel-based Virtual Machine (KVM) as their virtualization platform for testing and implementation. In short, the work contributes a feedback-based adaptive resource controller built on VM sensors and the KVM platform.

2) Paper [2] : Live Virtual Machine Migration for Resource Management

This study focused on analyzing live virtual machine migration as a tool for dynamic resource management in cloud computing. The researchers examined three key aspects of VM migration: determining when to migrate, selecting which VM to migrate, and deciding where to migrate it. They reviewed various heuristics and approaches for each of these decisions. The study also discussed the challenges associated with VM migration over both local area networks (LANs) and wide area networks (WANs). The methodology involved a comprehensive literature review and analysis of existing migration techniques. In short, the study analyzes VM migration strategies and challenges through a literature review.
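The three decisions named above (when, which, where) can be illustrated with a minimal sketch. The heuristics used here are assumptions chosen for illustration and are not taken from the reviewed study: a fixed CPU overload threshold, a smallest-sufficient-VM selection rule, and a least-loaded-target placement rule. The names `VM`, `Host`, and `plan_migration` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    name: str
    cpu: float  # CPU demand as a fraction of one host's capacity


@dataclass
class Host:
    name: str
    vms: list = field(default_factory=list)

    @property
    def load(self) -> float:
        return sum(vm.cpu for vm in self.vms)


def plan_migration(hosts, overload=0.8):
    """Return (vm, source, target) for one migration, or None."""
    # When: act only if some host exceeds the (assumed) overload threshold.
    hot = [h for h in hosts if h.load > overload]
    if not hot:
        return None
    source = max(hot, key=lambda h: h.load)

    # Which: the smallest VM whose removal alone relieves the source,
    # falling back to the largest VM if no single move is enough.
    excess = source.load - overload
    enough = [vm for vm in source.vms if vm.cpu >= excess]
    if enough:
        vm = min(enough, key=lambda v: v.cpu)
    else:
        vm = max(source.vms, key=lambda v: v.cpu)

    # Where: the least-loaded other host that stays under the threshold.
    targets = [h for h in hosts
               if h is not source and h.load + vm.cpu <= overload]
    if not targets:
        return None
    return vm, source, min(targets, key=lambda h: h.load)
```

Real schedulers typically weigh several resources and the cost of the migration itself; this sketch considers CPU only.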

3) Paper [3] : Multi-Domain Integration Analysis Framework for AI-Enabled Technology Convergence

The paper employs a comprehensive analytical approach to examine integration challenges across three key technologies - Cloud Computing, IoT, and Software-Defined Networking (SDN) - when combined with Artificial Intelligence. The researchers conduct a thorough literature review of existing integration attempts and challenges documented in prior studies. They systematically analyze how AI interacts individually with each technology domain, examining benefits and limitations. This methodology allows them to identify cross-cutting themes and common integration hurdles. The analysis focuses particularly on critical aspects like security, routing optimization, load balancing, and resource management that emerge when attempting to orchestrate these technologies together. The researchers validate their findings by mapping identified challenges against real-world implementation examples and industry use cases.

4) Paper [4] : Mixed Integer Linear Programming for Resource Provisioning

This research developed a Mixed Integer Linear Programming (MILP) model for resource provisioning in cloud environments. The model considered multiple Infrastructure-as-a-Service (IaaS) offerings, including compute, storage, and various data transfer services. The researchers focused on combining basic services to create more sophisticated offerings for cloud consumers. They evaluated their model using a simulation of an 18-node backbone network. The methodology involved mathematical modeling, optimization techniques, and numerical simulations. In short, the work contributes a MILP optimization model for multi-service resource provisioning, evaluated through network simulation.
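To make the shape of such a model concrete, a generic provisioning MILP can be written as below. This is an illustrative formulation only, not the model from the reviewed study. All symbols are assumptions introduced here: $N$ is the set of nodes, $S$ the set of services (for example compute, storage, data transfer), $x_{is}$ the amount of service $s$ provisioned at node $i$, $y_i$ a binary variable that activates node $i$, $c_{is}$ a unit cost, $f_i$ a fixed activation cost, $d_s$ the demand for service $s$, and $u_{is}$ a capacity.

```latex
\begin{aligned}
\min_{x,\,y} \quad & \sum_{i \in N} f_i\, y_i + \sum_{i \in N} \sum_{s \in S} c_{is}\, x_{is} \\
\text{s.t.} \quad & \sum_{i \in N} x_{is} \ge d_s && \forall s \in S \\
& x_{is} \le u_{is}\, y_i && \forall i \in N,\ s \in S \\
& x_{is} \ge 0,\quad y_i \in \{0, 1\} && \forall i \in N,\ s \in S
\end{aligned}
```

The binary $y_i$ is what makes the problem mixed-integer: capacity at a node is only available once the fixed cost of activating that node has been paid.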

5) Paper [5] : Quantitative Timing Model for Resource Planning

The researchers proposed a quantitative timing model for cloud resource planning. They used estimated steady-state resource usage times as a basis for their calculations. The study included developing methods to calculate speedup for parallel resource planning, with a focus on parallel matrix multiplication as an example. The researchers also proposed an application-dependent instrumentation method to measure multiple dimensions of a program's scalability. Their methodology involved mathematical modeling, algorithm development, and experimental analysis of application performance and resource utilization. In short, the work contributes quantitative modeling of resource planning using steady-state estimates and parallel speedup calculations.
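The speedup idea can be sketched with a toy timing model for row-partitioned matrix multiplication. Speedup is taken in its standard sense, serial time divided by parallel time. Everything else is an assumption for illustration and not the timing model of the reviewed study: the per-operation time `t_op`, the linear `overhead_per_worker` term, and the function names.

```python
import math


def matmul_time(n, workers, t_op=1e-9, overhead_per_worker=0.0):
    """Estimated time for an n x n matrix product split by rows.

    Each worker computes ceil(n / workers) rows, and each row costs
    n * n multiply-add operations. The overhead term is a hypothetical
    coordination cost that grows with the number of workers.
    """
    rows_per_worker = math.ceil(n / workers)
    compute = rows_per_worker * n * n * t_op
    return compute + overhead_per_worker * workers


def speedup(n, workers, **model):
    """Serial time divided by parallel time under the same toy model."""
    return matmul_time(n, 1, **model) / matmul_time(n, workers, **model)


def efficiency(n, workers, **model):
    """Speedup per worker; 1.0 means perfect scaling."""
    return speedup(n, workers, **model) / workers
```

With zero overhead and a worker count that divides `n` evenly, the model gives ideal linear speedup; uneven row splits or a non-zero overhead term pull the value below the worker count.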

6) Paper [6] : Expert-Driven Multi-Layer Cloud Migration Process Analysis Framework

The research methodology employed by the authors involved conducting expert interviews and focus groups with major international cloud solution providers and independent consultants. They specifically focused on investigating migration scenarios for the three primary cloud service models - SaaS, PaaS, and IaaS. The methodology included gathering insights from experts with 15-20 years of industrial experience and a minimum of 3 years in cloud migration, ensuring comprehensive coverage of real-world migration processes.

II. DEPLOYMENT SCHEME OPTIMIZATION

1) Adaptive Virtualized Resource Management (Li et al., 2020):

This approach deploys a dynamic resource management system using feedback control mechanisms. The implementation relies on KVM (Kernel-based Virtual Machine) for virtualization, allowing for fine-grained control over virtual resources. A key component is the development of a monitoring system that continuously tracks resource utilization across virtual machines. Based on this real-time data, the control system makes decisions to adjust resource allocations, primarily focusing on CPU and memory. The deployment process involves setting up the KVM environment, implementing the feedback control algorithms, and integrating the monitoring and resource adjustment components.
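The feedback loop described above can be sketched as a single-resource proportional controller. This is a deliberate simplification: the system described in the text adjusts several resources together, whereas this sketch adjusts CPU only. The gain, the clamping bounds, and the toy performance model (`response time = work / cpu`) are assumptions for illustration, and the function names are hypothetical.

```python
def adjust_allocation(current, measured, target, gain=0.5, lo=0.1, hi=4.0):
    """One control step: scale the allocation by the relative error.

    current  -- current CPU allocation (arbitrary units, e.g. cores)
    measured -- response time reported by the VM's sensor
    target   -- response time required by the service level objective
    """
    error = (measured - target) / target  # positive when too slow
    proposed = current * (1.0 + gain * error)
    return max(lo, min(hi, proposed))  # keep within allowed bounds


def simulate(work=2.0, target=1.0, cpu=1.0, steps=20):
    """Run the controller against a toy workload model."""
    for _ in range(steps):
        measured = work / cpu  # assumed model: more CPU, faster response
        cpu = adjust_allocation(cpu, measured, target)
    return cpu, work / cpu
```

Under this toy model the allocation converges toward `work / target`, with the gain controlling how quickly the gap closes at each step.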

2) Live Virtual Machine Migration (Mostafa Vakili Fard, Amir Sahafi, Amir Masoud Rahmani, Peyman Sheikholharam Mashhadi, 2019):

Deploying a live VM migration system requires careful consideration of several factors. The first step involves implementing a comprehensive monitoring system that tracks both host and VM performance metrics. This data feeds into decision-making algorithms that determine when a VM should be migrated and which host it should move to. The deployment must also include mechanisms to perform the actual migration with minimal service disruption, which involves transferring the VM's memory state and storage. Network bandwidth becomes a critical factor, so the deployment should include network capacity planning and potentially implementing data compression techniques for migration traffic.
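For the network capacity planning mentioned above, a back-of-the-envelope transfer estimate is often a useful starting point. The sketch below is an assumption-laden illustration, not a method from the reviewed study: it treats the migration as one bulk copy of memory and storage, ignores memory pages that change during the copy, and models compression as a simple size ratio.

```python
def transfer_seconds(memory_gib, storage_gib, bandwidth_gbps,
                     compression_ratio=1.0):
    """Lower-bound time to move a VM's state over the network.

    memory_gib, storage_gib -- state sizes in GiB (2**30 bytes)
    bandwidth_gbps          -- usable bandwidth in gigabits per second
    compression_ratio       -- original size / compressed size (>= 1)
    """
    bits = (memory_gib + storage_gib) * 8 * 2**30
    return bits / compression_ratio / (bandwidth_gbps * 1e9)
```

For example, 8 GiB of memory with no storage copy over a 1 Gbps link needs roughly 69 seconds before any compression, so actual migrations on a busy VM will take longer than this bound suggests.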

3) AISDCoT: An Optimized Framework for AI-Driven Cloud-IoT-SDN Integration (Mohammad Riyaz Belgaum, 2019)

The paper proposes an optimized deployment framework called Artificial Intelligence Software-Defined Cloud of Things (AISDCoT) that enables seamless integration across the technology stack. This framework implements a layered architecture where AI capabilities are embedded at multiple levels - from edge devices to cloud infrastructure - to enable intelligent automation and orchestration. The deployment scheme emphasizes maintaining flexibility through software-defined approaches while leveraging cloud resources for scalability. Key optimization elements include intelligent routing algorithms for IoT device communication, AI-driven load balancing for SDN controllers, and automated resource allocation mechanisms. The framework incorporates security by design through AI-enabled threat detection and prevention across all layers. Special consideration is given to minimizing latency and optimizing quality of service through strategic placement of processing capabilities between edge and cloud.

4) Skewness-based Resource Allocation (Xiao et al., 2018)

Deploying this system starts with implementing the skewness metric calculation algorithm, which measures the imbalance in resource utilization across virtual machines. The deployment uses Xen for virtualization, requiring setup and configuration of the Xen hypervisor on all host machines. A critical component is the continuous monitoring system that feeds data into the skewness calculation. The deployment must also include mechanisms for triggering and executing VM migrations based on the skewness metrics. This involves developing algorithms to determine optimal VM placements and implementing the migration process with minimal disruption to running services.
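A minimal sketch of a skewness calculation follows. The text above does not give the formula, so the definition used here is an assumption: the square root of the summed squared deviations of each resource's utilization from the mean utilization, with each deviation normalized by that mean. The function name is hypothetical.

```python
import math


def skewness(utilizations):
    """Imbalance across resource utilizations (each in the range 0..1).

    Returns 0.0 when all resources are equally utilized and grows as
    utilization becomes more uneven.
    """
    mean = sum(utilizations) / len(utilizations)
    if mean == 0:
        return 0.0  # an idle machine is treated as perfectly balanced
    return math.sqrt(sum((u / mean - 1.0) ** 2 for u in utilizations))
```

A placement algorithm built on this metric would prefer migrations that lower the skewness of the machines involved, for example by pairing a CPU-heavy VM with a memory-heavy one.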

5) Threshold-based Dynamic Resource Allocation (Lin et al., 2014):

The deployment of this system begins with setting up a robust monitoring infrastructure capable of tracking resource usage across all virtual machines and physical hosts. Thresholds for different resource types (CPU, memory, network, etc.) need to be defined and implemented in the system. The core of the deployment involves developing the mechanisms that trigger resource allocation changes when these thresholds are crossed. Using CloudSim for simulation and testing is a key part of the deployment process, allowing for refinement of the threshold values and allocation algorithms before live implementation. The system should also include components for dynamically adjusting thresholds based on overall system performance and utilization patterns.
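The threshold mechanism can be sketched as a lookup of per-resource bounds followed by a simple comparison. The threshold values, resource names, and action labels below are hypothetical placeholders, not values from the reviewed study; in practice they would be tuned in simulation before live use.

```python
# Hypothetical (lower, upper) utilization thresholds per resource type.
THRESHOLDS = {
    "cpu": (0.30, 0.80),
    "memory": (0.40, 0.85),
    "network": (0.20, 0.75),
}


def decide(usage, thresholds=THRESHOLDS):
    """Map each resource's utilization (0..1) to an allocation action."""
    actions = {}
    for resource, value in usage.items():
        lower, upper = thresholds[resource]
        if value > upper:
            actions[resource] = "scale_up"    # threshold crossed upward
        elif value < lower:
            actions[resource] = "scale_down"  # resource is underused
        else:
            actions[resource] = "hold"
    return actions
```

Passing the thresholds as a parameter leaves room for the dynamic adjustment mentioned above: a separate component can supply updated bounds without changing the decision logic.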

6) Incremental Layer-Specific Migration Strategy with Architecture-Centric Transformation (Claus Pahl, 2013)

The paper emphasizes an incremental approach to cloud migration across all service levels. For SaaS, optimization focuses on data transformation and segmentation strategies. In PaaS deployments, the emphasis is on architectural transformation toward stateless components and data externalization. IaaS optimization involves careful planning of infrastructure mapping and pre-testing before production deployment, with particular attention to network topology and storage structures.

III. CASE STUDIES AND PRACTICAL APPLICATIONS

In one case study, a travel planning company utilized SaaS deployment optimization to improve response times. The company faced challenges in ensuring swift responses while managing high user demand for services such as destination recommendations and price filtering. By redistributing these services across different server nodes, the company was able to optimize the load handling of its system. This practical application highlights the importance of smart deployment strategies in SaaS environments, where balancing server loads can significantly enhance performance, especially when catering to high-demand scenarios.
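One simple way to redistribute services across nodes is a greedy heuristic that places the heaviest service first on the currently least-loaded node. This is a sketch of that generic heuristic and not the method used in the case study; the load figures and any service names beyond those mentioned above are hypothetical.

```python
import heapq


def distribute(service_loads, node_count):
    """Assign services to nodes, heaviest first, least-loaded node first.

    service_loads -- dict of service name -> estimated load
    Returns a dict of node index -> list of service names.
    """
    heap = [(0.0, node) for node in range(node_count)]  # (load, node)
    heapq.heapify(heap)
    placement = {node: [] for node in range(node_count)}
    ordered = sorted(service_loads.items(), key=lambda kv: kv[1],
                     reverse=True)
    for name, load in ordered:
        total, node = heapq.heappop(heap)  # least-loaded node so far
        placement[node].append(name)
        heapq.heappush(heap, (total + load, node))
    return placement
```

For example, with loads of 5 for `recommendations`, 4 for `price_filter`, 3 for `search`, and 2 for `booking` on two nodes, the heuristic pairs the heaviest service with the lightest so that both nodes carry a load of 7.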

Another case focuses on a cloud services provider that conducted a thorough review of resource allocation (RA) mechanisms to enhance service efficiency. With the growing complexity of cloud infrastructure, traditional RA mechanisms were found lacking, particularly in managing varying workloads and ensuring quality of service. By adopting dynamic RA approaches that accounted for fluctuating demand and hardware heterogeneity, the provider was able to improve resource utilization while reducing costs. This case illustrates the importance of continuously reviewing and updating RA strategies to meet both consumer needs and business goals.

A third case study examines Amazon Prime Video’s effort to optimize infrastructure costs by shifting from a distributed microservices architecture to a monolithic system for its video monitoring service. Prime Video initially used separate services for monitoring different aspects of audio and video quality, but this setup proved expensive and inefficient. By consolidating these services into a single monolithic architecture, the company was able to reduce its monitoring costs by 90% while improving scalability and ease of management. This practical example underscores how rearchitecting systems based on performance and cost considerations can yield significant savings.

Customization in SaaS provides another interesting example of practical application. A SaaS provider enabled businesses to customize their platforms by selecting from prebuilt components for their graphical user interfaces, workflows, and data storage needs. This approach allowed businesses to quickly tailor the software to their requirements without having to build solutions from scratch. The ability to select, assemble, and reuse software components resulted in faster, more efficient development cycles and higher client satisfaction. This case shows that offering customization can be a key differentiator in SaaS offerings.

Finally, in the realm of cloud computing, a provider grappled with resource allocation issues due to finite hardware resources and fluctuating customer demand. To address this, the company employed advanced virtualization techniques and real-time workload management systems. These innovations allowed the provider to dynamically allocate resources based on consumer demand, ensuring that resources were used efficiently without compromising service quality. This case highlights how cloud providers can balance customer satisfaction and profit by implementing flexible, real-time resource management strategies.

One of the reviewed studies offers illustrative examples to demonstrate the application of its proposed metrics. These examples show how the Performance/Resource Ratio (PRR) and PC are calculated under different scenarios, such as when workload doubles or when resources are doubled without changing workload. The authors briefly mention how their proposed metrics and data mining techniques could be applied to real-world scenarios, such as finding bottlenecks and reducing test cases. These examples and suggestions hint at the practical potential of the methodology, although more extensive real-world applications would be needed to fully validate its effectiveness.

Another reviewed study presents numerical evaluations comparing the modified Bin-Packing and MCMF algorithms. Simulations consider scenarios with 3, 5, and 8 instance types and varying numbers of physical machines (20-200). Two main cost function scenarios are explored: an inverse cost function and random costs. Results show that the MCMF algorithm achieves optimal or near-optimal performance compared to Bin-Packing, especially for larger problem sizes. The approach demonstrates the benefits of dynamic pricing over fixed pricing in stabilizing provider costs. The method is applicable to cloud providers seeking to optimize resource allocation and pricing in dynamic environments.

IV. CHALLENGES AND FUTURE DIRECTIONS

Cloud computing presents several complex challenges, particularly in the areas of resource allocation, scalability, and cost optimization. One of the main difficulties is managing diverse and dynamic workloads within a virtualized environment while ensuring fairness and efficiency. Traditional resource allocation techniques struggle to keep up with the ever-changing demands of cloud consumers, especially when dealing with heterogeneous hardware capabilities and fluctuating workloads. The goal of cloud providers is to maximize profit while minimizing costs for consumers, but achieving this balance is difficult given the need to meet Service Level Objectives (SLOs) for performance, availability, and security. Furthermore, external applications running in deployment environments often cause performance interference, leading to inaccuracies in response time predictions. This makes it even harder to optimize system performance and meet consumer expectations.

Architecture emerges as a critical concern, requiring significant redesign to leverage cloud advantages like elasticity. Business challenges include managing changed cash flows and addressing skills gaps, particularly for IT staff who need to shift focus toward integration, configuration, and security. The reviewed migration study also highlights stakeholder resistance, especially from IT specialists who may fear losing control and status when software is managed elsewhere. As a future direction, its authors propose developing a migration pattern catalogue that would be more specific than the general processes described. These patterns should account for organizational size, which influences needs and financial scope, and should differentiate between various SaaS application categories, PaaS development and deployment scenarios, and IaaS compute, storage, and networking requirements. This would provide more targeted guidance for specific migration scenarios while maintaining flexibility for different organizational contexts.

The key challenges identified in the integration of AI with cloud computing, IoT, and SDN center around several critical areas. The primary concern is ensuring robust security across the integrated system, particularly given the vulnerability of SDN flow tables when handling dynamically scaling IoT devices. Resource management poses another significant challenge, encompassing issues of load balancing, efficient TCAM memory utilization, and interoperability between diverse devices and protocols. The maintenance of Quality of Service metrics becomes increasingly complex when managing massive IoT data flows through SDN controllers and cloud infrastructure. Additionally, the need to optimize energy consumption and control heat emission to support green computing initiatives presents an ongoing challenge that impacts system sustainability and operational costs.

Looking toward future research directions, several promising avenues emerge for advancing this integrated technology stack. A critical focus area is the development of sophisticated AI-powered routing algorithms that can efficiently manage cross-domain traffic while maintaining optimal performance. There is a pressing need for establishing unified standards and protocols to enable seamless interoperability between devices across cloud, IoT, and SDN platforms.

Research efforts should also concentrate on designing innovative deep learning architectures capable of processing distributed IoT data while minimizing storage requirements. Furthermore, the implementation of adaptive security frameworks leveraging machine learning capabilities for real-time threat detection and mitigation will be crucial for ensuring system resilience and data protection in this complex integrated environment.

Conclusion

The conclusions drawn across this paper highlight several key aspects of cloud computing and its profound impact on software performance, resource management, and cost efficiency. Cloud computing is now widely accepted and applied across various domains, making the management and dynamic allocation of resources crucial due to finite availability. Resource allocation in cloud computing must meet essential criteria such as Quality of Service (QoS), cost reduction, and energy efficiency. This has driven researchers and practitioners to develop various techniques for dynamic resource allocation, especially in IaaS (Infrastructure as a Service) environments, where virtual machine scheduling plays a significant role. Furthermore, deployment changes within SaaS (Software as a Service) models are shown to directly influence software response times. By analyzing the effects of these changes, performance improvements can be achieved through better deployment schemes. The results offer a guide for future deployment strategies aimed at optimizing response times, which ultimately benefits user experience and operational efficiency. The SaaS model’s unique architecture, including its lifecycle models, underlines the importance of managing tenant applications and databases, where platforms such as Salesforce have demonstrated the success of scalable, multi-tenant solutions. Cloud pricing models are another area of significant importance, with case studies like Prime Video and Pinterest demonstrating how models like on-demand and reserved instances can optimize costs and resource allocation. By combining pricing strategies with robust cost management practices, organizations can maximize the value of their cloud investments, reduce unnecessary expenses, and ensure flexible, scalable solutions for their needs.

The future of cloud computing research includes topics such as enhancing security, privacy, energy efficiency, load balancing, and flexibility in cloud infrastructures. New avenues of research will focus on optimizing cloud environments for improved scalability, minimizing energy consumption, and maximizing both cloud service provider profits and user satisfaction. Embracing innovations in resource allocation, cloud pricing, and performance diagnostics will continue to drive advancements in the cloud computing landscape, ensuring that organizations can adapt to dynamic demands while maintaining financial and operational efficiency.

One reviewed study presents an innovative method for evaluating SaaS application scalability, integrating a unique Performance/Resource Ratio metric with advanced data mining techniques. The proposed approach offers valuable insights into application performance and resource allocation strategies in cloud environments. Its authors conclude that their methodology can significantly improve scalability management for SaaS applications, and indicate plans for future studies across various cloud platforms.

References

[1] Dong, Bo, et al. "Impact analysis about response time considering deployment change of SaaS software." International Journal of Software Engineering and Knowledge Engineering 30.07 (2020): 977-1004.

[2] Fard, Mostafa Vakili, et al. "Resource allocation mechanisms in cloud computing: a systematic literature review." IET Software 14.6 (2020): 638-653.

[3] Belgaum, Mohammad Riyaz, et al. "Integration challenges of artificial intelligence in cloud computing, Internet of Things and software-defined networking." 2019 13th International Conference on Mathematics, Actuarial Science, Computer Science and Statistics (MACS). IEEE, 2019.

[4] Tsai, WeiTek, XiaoYing Bai, and Yu Huang. "Software-as-a-service (SaaS): perspectives and challenges." Science China Information Sciences 57 (2014): 1-15.

[5] Parikh, Swapnil M. "A survey on cloud computing resource allocation techniques." 2013 Nirma University International Conference on Engineering (NUiCONE). IEEE, 2013.

[6] Pahl, Claus, Huanhuan Xiong, and Ray Walshe. "A comparison of on-premise to cloud migration approaches." Service-Oriented and Cloud Computing: Second European Conference, ESOCC 2013, Málaga, Spain, September 11-13, 2013. Proceedings 2. Springer Berlin Heidelberg, 2013.

[7] Kavis, Michael. Architecting the cloud: design decisions for cloud computing service models (SaaS, PaaS, and IaaS). John Wiley & Sons, Inc., Hoboken, New Jersey, 2014.

Copyright

Copyright © 2025 Prof. Manisha Patil, Supriya Kamthekar, Shon Gaikwad, Yash Lonkar, Akshaya Survase. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET67035

Publish Date : 2025-02-19

ISSN : 2321-9653

Publisher Name : IJRASET
